
As industries evolve with changing technologies and customer behaviour, DevOps is emerging as an essential practice for organizations that want to deliver quality user experiences with a short time-to-market. DevOps is no longer just about automation: technologies such as Artificial Intelligence, cloud computing, and chatbots have taken center stage in every industry by integrating with DevOps culture.

Let us take a quick look at how DevOps is likely to influence various industries in 2024 and beyond.

IT Industry

DevOps can simplify and automate many operations with the help of comprehensive tools and technologies, and its future in the IT industry looks strong. As DevOps becomes more popular, companies are looking to hire skilled DevOps professionals who can coordinate development and operations. These engineers are expected to automate tasks and to monitor and control the entire process, from development to deployment. They will also be hired to anticipate challenges proactively and to handle both the technical and non-technical aspects of the software development cycle.

Healthcare

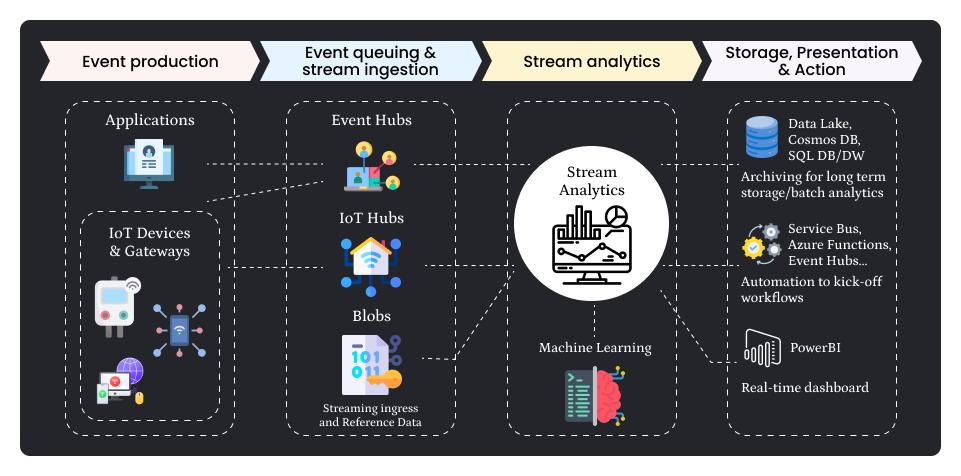

In healthcare, DevOps is expected to show up mainly through deeper integration of AI and machine learning. By combining these technologies with DevOps practices, the industry will be able to streamline processes and change the way professionals analyze healthcare data. New features built this way can improve diagnostic accuracy and support better treatment strategies.

Telecommunications

Many telecom companies have shifted to cloud-based networks in recent years. In the future, they will need less on-premises infrastructure, and network services will be able to scale rapidly. Together, cloud computing and DevOps practices will reshape the telecommunications industry. Telecom companies will use DevOps to reduce costs, minimize manual intervention, cut resource waste, and improve resource utilization. As DevOps tools and technologies bring automation to this industry as well, more telecom giants will adopt the efficient resource management that DevOps enables and, as a result, deliver better-quality services.

Hospitality

The hospitality industry is constantly evolving and has a promising future. By practicing DevOps, hotels and large hospitality companies can deliver high-quality services and automate routine workflows, freeing staff to focus on building lasting relationships with customers and stakeholders. By integrating Artificial Intelligence with DevOps, hotels can also predict consumer behaviour, analyze their data, and generate more revenue.

Insurance

The insurance sector has already begun adopting DevOps practices by automating processes that are time-consuming or difficult for people to handle manually. From claims processing to underwriting, DevOps can automate workflows, reducing human effort and boosting productivity within shorter timelines. In the future, insurance companies will offer core digital services, such as websites and mobile applications, at a much higher standard than today. From premium payments to claim settlements, DevOps will improve many processes.

Banking

The banking and finance industry has already brought DevOps culture into its workflows and operational systems. The faster feedback loops and frequent deployments that DevOps enables allow banks to release software quickly and iterate without disrupting ongoing services. Banks also rely heavily on DevOps for their IT infrastructure because they must comply with strict regulations such as the Payment Card Industry Data Security Standard (PCI DSS), and automated, repeatable processes make that compliance easier to maintain.

Moreover, many traditional banks now realize they need to move faster to widen their market reach. Because DevOps supports agile methods and quick deployment, banks can launch new features and stay ahead of their competitors. They can also work more efficiently by reducing manual processes, breaking down siloed teams, and limiting the drag of legacy systems. With DevOps, now and in the future, banks can deliver new products and services much faster than before.

Inventory Management

DevOps has grown from a specific set of tools into a way of helping large enterprises transform their businesses with new functions and activities, including product development, customer service, marketing, and sales. Beyond inventory, DevOps has also driven major change in areas such as IT operations management, quality assurance, project management, security engineering, and human resources.

Manufacturing

The manufacturing industry has leveraged DevOps practices for some time to improve production processes and reduce errors. DevOps tools and technologies have enabled the automation of many workflows, improving how resources and investments are used. As end-to-end infrastructure automation matures, manufacturers can simplify their processes despite a wide range of complex hardware, software, and firmware systems. Through routine testing and regular bug fixing, DevOps engineers are raising productivity across the manufacturing industry, and manufacturers can build scalable, robust environments that produce quality products faster. In the future, DevOps is expected to shorten mean time to recovery (MTTR), reducing downtime and speeding up repairs and recovery.
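MTTR is commonly calculated as total downtime divided by the number of incidents. The sketch below illustrates that arithmetic, assuming each incident is recorded as a pair of timestamps (when the outage started and when service recovered); the `Incident` type, the `mean_time_to_recovery` helper, and the sample data are hypothetical and only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    """Hypothetical outage record: when it started and when service recovered."""
    started: datetime
    recovered: datetime


def mean_time_to_recovery(incidents: list[Incident]) -> timedelta:
    """MTTR = total downtime / number of incidents."""
    if not incidents:
        raise ValueError("MTTR is undefined when there are no incidents")
    # Sum each incident's downtime, starting from a zero timedelta.
    total_downtime = sum((i.recovered - i.started for i in incidents), timedelta())
    return total_downtime / len(incidents)


if __name__ == "__main__":
    # Illustrative data only: two outages lasting 30 and 90 minutes.
    incidents = [
        Incident(datetime(2024, 1, 5, 10, 0), datetime(2024, 1, 5, 10, 30)),
        Incident(datetime(2024, 2, 9, 14, 0), datetime(2024, 2, 9, 15, 30)),
    ]
    print(mean_time_to_recovery(incidents))  # 1:00:00
```

Tracking MTTR this way makes the effect of automated testing, monitoring, and rollback visible: if recoveries get faster, the average drops.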

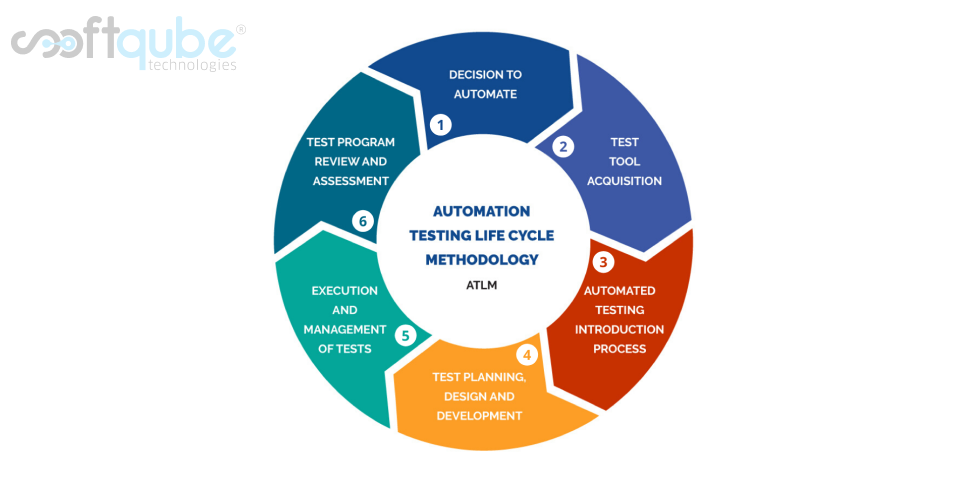

Top Future Trends of DevOps That Will Influence Industries

| Trends | Description |

| Automation and Artificial Intelligence | Problem identification and offering quick and effective solutions. |

| Enhanced Collaboration | Promotes in-depth knowledge of team tasks and responsibilities |

| Security Automation | Provides a fully automated system to teams for maintaining app security |

| Implementation of DevOps across all industries | All types and sizes of industries will soon adopt DevOps technologies |

| CI/CD | Organizations will deliver software constantly, rapidly, and with reliability through automation |

| Cloud-native architecture | Cloud, DevOps, and software principles will combine to build innovative products and features. |

| Microservices Architecture | Complex applications will be broken down into small services, making tasks easier and deployable |

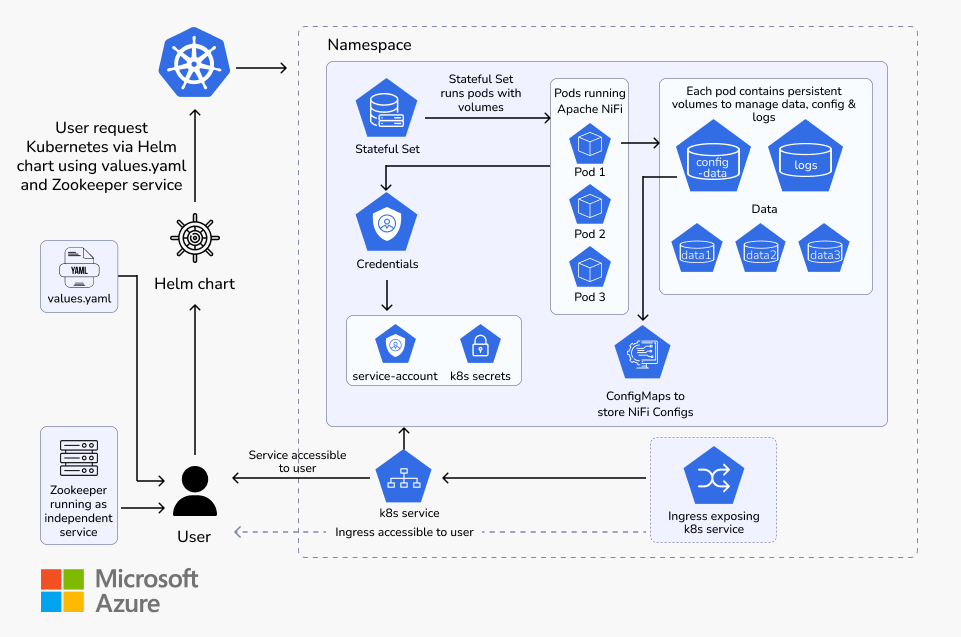

| Kubernetes and container orchestration | Effective management of containerized apps will be allocated across various deployment platforms |

Conclusion

DevOps has been thriving for a while, and its future looks promising for all kinds of industries. It keeps adopting tools that foster quick delivery, automation, and easy collaboration for businesses. Its ability to evolve with changing trends makes it likely to be accepted and implemented by small and large companies alike. The future of DevOps is full of possibilities for organizations; the real challenge lies in choosing an implementation strategy that delivers the desired outcome. If you are looking for robust solutions for your business workflows and want to reduce time-to-market for product delivery and deployment, choose the right partner for your DevOps journey. Softqube Technologies has the best DevOps engineers, who have proven their skills and expertise by transforming several businesses into profitable hubs.

FAQs

How can DevOps help my business?

Implementing DevOps automation makes businesses more agile and efficient in their operations. Development and operations teams collaborate closely, enabling continuous delivery, ongoing software maintenance, and quick responses to issues.

How can I implement DevOps?

To implement a DevOps strategy in your business, follow these steps: choose a cloud service provider, design the architecture, create a CI/CD pipeline, use infrastructure as code (IaC) to automate provisioning and configuration, ensure security and compliance, and finally set up support, maintenance, and incident response.
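A CI/CD pipeline can be pictured as an ordered list of stages in which any failure stops the run before later stages, such as deployment, execute. The following is a minimal, tool-agnostic sketch of that fail-fast behaviour in Python; the `run_pipeline` helper and the stage names are hypothetical, and real pipelines are normally defined in a CI service's own configuration format rather than in code like this.

```python
from typing import Callable

# A stage is a name plus a callable that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns the names of the stages that completed successfully.
    """
    completed: list[str] = []
    for name, step in stages:
        if not step():
            print(f"stage '{name}' failed; later stages skipped")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    # Hypothetical stages; each lambda stands in for a real build, test, or deploy job.
    stages: list[Stage] = [
        ("build", lambda: True),
        ("unit-tests", lambda: True),
        ("security-scan", lambda: False),  # simulated failure
        ("deploy", lambda: True),
    ]
    print(run_pipeline(stages))  # ['build', 'unit-tests']
```

Placing a security check before deployment in the stage order is one simple way to make "ensure security and compliance" part of the pipeline itself.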

What is the next big transformation through DevOps?

The future of DevOps will be more about teams working together to build better products efficiently. The line between development and operations will blur, with a single team covering both roles. As a result, developers will play a larger role in introducing and practicing new technologies and innovations in their development processes.

How long will DevOps be around?

DevOps has already been around for many years, and because it has become so popular among organizations, it is likely to remain for many years to come. The DevOps approach is about significantly transforming how organizations deliver and maintain products: keeping quality high while moving with speed and agility.